Regex¶

In this lesson, we'll learn about a useful tool in the NLP toolkit: regex.

Let's consider two motivating examples:

1. The phone number problem¶

Suppose we are given some data that includes phone numbers:

123-456-7890

123 456 7890

101 Howard

Some of the phone numbers have different formats (hyphens, no hyphens). Also, there are some errors in the data: 101 Howard isn't a phone number! How can we find all the phone numbers?

2. Creating our own tokens¶

In the previous lessons, we used sklearn or fastai to tokenize our text. What if we want to do it ourselves?

The phone number problem¶

Suppose we are given some data that includes phone numbers:

123-456-7890

123 456 7890

(123)456-7890

101 Howard

Some of the phone numbers have different formats (hyphens, no hyphens, parentheses). Also, there are some errors in the data: 101 Howard isn't a phone number! How can we find all the phone numbers?

We will attempt this without regex, but will see that it quickly leads to a lot of if/else branching and isn't a very promising approach:

Attempt 1 (without regex)¶

import string

phone1 = "123-456-7890"

phone2 = "123 456 7890"

not_phone1 = "101 Howard"

string.digits

'0123456789'

def check_phone(inp):
    # Accept the input only if every character is a digit or a separator.
    valid_chars = string.digits + ' -()'
    for char in inp:
        if char not in valid_chars:
            return False
    return True

assert(check_phone(phone1))

assert(check_phone(phone2))

assert(not check_phone(not_phone1))

Attempt 2 (without regex)¶

not_phone2 = "1234"

assert(not check_phone(not_phone2))

-----------------------------------
AssertionError                            Traceback (most recent call last)
<ipython-input-82-325c150ba276> in <module>
----> 1 assert(not check_phone(not_phone2))

AssertionError:

def check_phone(inp):
    nums = string.digits
    valid_chars = nums + ' -()'
    num_counter = 0
    for char in inp:
        if char not in valid_chars:
            return False
        if char in nums:
            num_counter += 1
    # A valid phone number must contain exactly 10 digits.
    if num_counter==10:
        return True
    else:
        return False

assert(check_phone(phone1))

assert(check_phone(phone2))

assert(not check_phone(not_phone1))

assert(not check_phone(not_phone2))

Attempt 3 (without regex)¶

But we also need to extract the digits!

Also, what about:

34!NA5098gn#213ee2

not_phone3 = "34 50 98 21 32"

assert(not check_phone(not_phone3))

-----------------------------------
AssertionError                            Traceback (most recent call last)
<ipython-input-85-63ad4ac6a61d> in <module>
      1 not_phone3 = "34 50 98 21 32"
      2
----> 3 assert(not check_phone(not_phone3))

AssertionError:

not_phone4 = "(34)(50)()()982132"

assert(not check_phone(not_phone4))

-----------------------------------
AssertionError                            Traceback (most recent call last)
<ipython-input-86-081f5987a5f8> in <module>
      1 not_phone4 = "(34)(50)()()982132"
      2
----> 3 assert(not check_phone(not_phone4))

AssertionError:

This is getting increasingly unwieldy. We need a different approach.

Introducing regex¶

Useful regex resources:

Best practice: Be as specific as possible.

Parts of the following section were adapted from Brian Spiering, who taught the MSDS NLP elective last summer.

What is regex?¶

Regular expressions are a pattern-matching language.

Instead of writing 0 1 2 3 4 5 6 7 8 9, you can write [0-9] or \d

Regex is a domain-specific language (DSL): powerful, but limited.

What other DSLs do you already know?

- SQL

- Markdown

- TensorFlow

Matching Phone Numbers (The "Hello, world!" of Regex)¶

[0-9][0-9][0-9]-[0-9][0-9][0-9]-[0-9][0-9][0-9][0-9] matches a US telephone number.

Refactored: \d\d\d-\d\d\d-\d\d\d\d

A metacharacter is one or more special characters that have a unique meaning and are NOT used as literals in the search expression. For example "\d" means any digit.

Metacharacters are the special sauce of regex.
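As a small illustration of the [0-9] and \d shorthands mentioned above, the sketch below uses Python's built-in re module. The sample string is made up for this example. It also shows one caveat: for ordinary (str) patterns in Python 3, \d matches non-ASCII decimal digits as well, unless the re.ASCII flag is passed.

```python
import re

text = "Call 555-0199 before 9am"  # made-up sample text

# On ASCII text, the character class and the metacharacter find the same digits.
digits_class = re.findall(r"[0-9]", text)
digits_meta = re.findall(r"\d", text)
print(digits_class == digits_meta)  # True

# Caveat: \d is broader than [0-9] for str patterns in Python 3.
arabic_three = "\u0663"  # ARABIC-INDIC DIGIT THREE
print(bool(re.fullmatch(r"\d", arabic_three)))            # True
print(bool(re.fullmatch(r"[0-9]", arabic_three)))         # False
print(bool(re.fullmatch(r"\d", arabic_three, re.ASCII)))  # False
```

If only the ten ASCII digits should count, [0-9] or the re.ASCII flag is the more specific choice.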

Quantifiers¶

Allow you to specify how many times the preceding expression should match.

{n} is an exact quantifier: the preceding expression must match exactly n times.

Refactored: \d{3}-\d{3}-\d{4}
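Here is a minimal sketch of how the refactored pattern could be applied in Python. It assumes we want the whole string to be a phone number, so it uses re.fullmatch. The candidate list reuses the examples from earlier in the lesson.

```python
import re

# The pattern from the text: 3 digits, hyphen, 3 digits, hyphen, 4 digits.
phone_re = re.compile(r"\d{3}-\d{3}-\d{4}")

candidates = ["123-456-7890", "123 456 7890", "101 Howard", "1234"]

# fullmatch succeeds only if the entire string fits the pattern.
results = {c: bool(phone_re.fullmatch(c)) for c in candidates}
for candidate, ok in results.items():
    print(f"{candidate!r}: {ok}")
```

Only the hyphenated form is accepted. The space-separated number is rejected because the pattern demands literal hyphens, which is the kind of strictness that inexact quantifiers and character classes can relax.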

Inexact quantifiers¶

- ? (question mark): zero or one

- * (star): zero or more

- + (plus sign): one or more

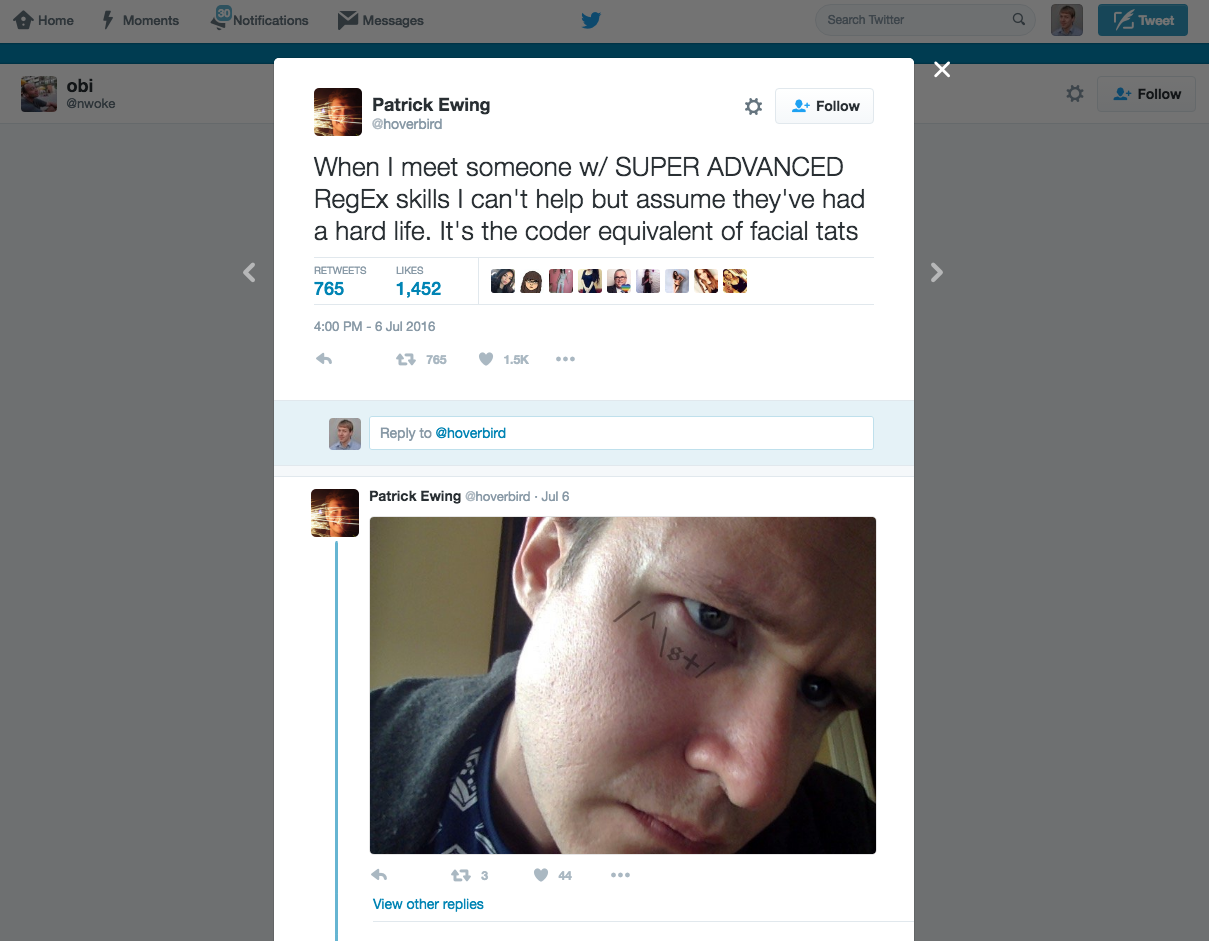

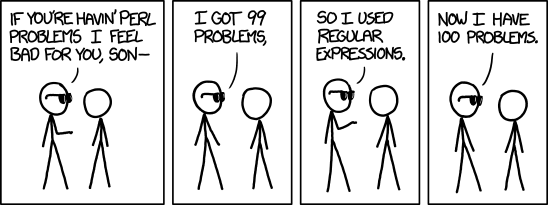

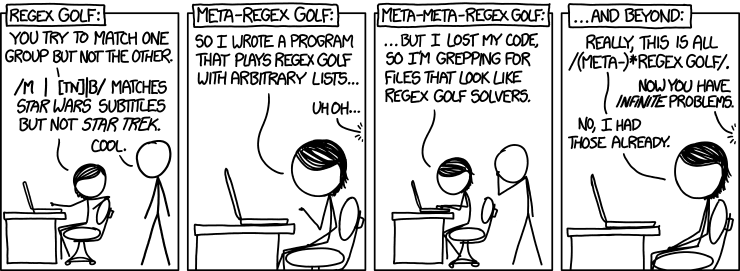
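The three inexact quantifiers can be tried out directly in Python. The sketch below first checks each quantifier on toy strings, then uses ? to tolerate the phone formats from this lesson. The name loose_phone and its pattern are assumptions made for illustration, not a definitive phone-number regex.

```python
import re

# ? : zero or one -> the "u" is optional
assert re.fullmatch(r"colou?r", "color")
assert re.fullmatch(r"colou?r", "colour")

# * : zero or more -> "a" followed by any number of "b"s, including none
assert re.fullmatch(r"ab*", "a")
assert re.fullmatch(r"ab*", "abbb")

# + : one or more -> at least one "b" is required
assert re.fullmatch(r"ab+", "a") is None
assert re.fullmatch(r"ab+", "abbb")

# Hypothetical pattern: optional parentheses and optional space/hyphen separators.
loose_phone = re.compile(r"\(?\d{3}\)?[ -]?\d{3}[ -]?\d{4}")
for s in ["123-456-7890", "123 456 7890", "(123)456-7890", "101 Howard"]:
    print(s, bool(loose_phone.fullmatch(s)))
```

Note that this loose pattern also accepts an unbalanced input such as "(123-456-7890", because each parenthesis is optional on its own. That is a false positive of the kind the lesson warns about.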

Regex can look really weird, since it's so concise¶

The best (only?) way to learn it is through practice. Otherwise, you feel like you're just reading lists of rules.

Let's take 15 minutes to begin working through the lessons on regexone.

Reminder: Be as specific as possible!

Pros & Cons of Regex¶

What are the advantages of regex?

- Concise and powerful pattern matching DSL

- Supported by many computer languages, including SQL

What are the disadvantages of regex?

- Brittle

- Hard to write; patterns can get complex before they are correct

- Hard to read

Revisiting tokenization¶

In the previous lessons, we used a tokenizer. Now, let's learn how we could do this ourselves, and get a better understanding of tokenization.

What if we needed to create our own tokens?

import re

re_punc = re.compile(r"([\"'().,;:/_?!—\-])")  # add spaces around punctuation

re_apos = re.compile(r"n ' t ")  # n't

re_bpos = re.compile(r" ' s ")  # 's

re_mult_space = re.compile(r" {2,}")  # replace multiple spaces with just one

def simple_toks(sent):
    sent = re_punc.sub(r" \1 ", sent)    # isolate each punctuation mark
    sent = re_apos.sub(r" n't ", sent)   # rejoin the n't contraction
    sent = re_bpos.sub(r" 's ", sent)    # rejoin the possessive 's
    sent = re_mult_space.sub(' ', sent)  # collapse runs of spaces
    return sent.lower().split()

text = "I don't know who Kara's new friend is-- is it 'Mr. Toad'?"

' '.join(simple_toks(text))

"i do n't know who kara 's new friend is - - is it ' mr . toad ' ?"

text2 = re_punc.sub(r" \1 ", text); text2

"I don ' t know who Kara ' s new friend is - - is it ' Mr . Toad ' ? "

text3 = re_apos.sub(r" n't ", text2); text3

"I do n't know who Kara ' s new friend is - - is it ' Mr . Toad ' ? "

text4 = re_bpos.sub(r" 's ", text3); text4

"I do n't know who Kara 's new friend is - - is it ' Mr . Toad ' ? "

re_mult_space.sub(' ', text4)

"I do n't know who Kara 's new friend is - - is it ' Mr . Toad ' ? "

sentences = ['All this happened, more or less.',
             'The war parts, anyway, are pretty much true.',
             "One guy I knew really was shot for taking a teapot that wasn't his.",
             'Another guy I knew really did threaten to have his personal enemies killed by hired gunmen after the war.',
             'And so on.',
             "I've changed all their names."]

tokens = list(map(simple_toks, sentences))

tokens

[['all', 'this', 'happened', ',', 'more', 'or', 'less', '.'], ['the', 'war', 'parts', ',', 'anyway', ',', 'are', 'pretty', 'much', 'true', '.'], ['one', 'guy', 'i', 'knew', 'really', 'was', 'shot', 'for', 'taking', 'a', 'teapot', 'that', 'was', "n't", 'his', '.'], ['another', 'guy', 'i', 'knew', 'really', 'did', 'threaten', 'to', 'have', 'his', 'personal', 'enemies', 'killed', 'by', 'hired', 'gunmen', 'after', 'the', 'war', '.'], ['and', 'so', 'on', '.'], ['i', "'", 've', 'changed', 'all', 'their', 'names', '.']]

Once we have our tokens, we need to convert them to integer ids. We will also need to know our vocabulary, and have a way to convert between words and ids.

import collections

PAD = 0; SOS = 1

def toks2ids(sentences):
    # Count tokens, then order the vocabulary from most to least frequent.
    voc_cnt = collections.Counter(t for sent in sentences for t in sent)
    vocab = sorted(voc_cnt, key=voc_cnt.get, reverse=True)
    # Reserve ids 0 and 1 for the special tokens.
    vocab.insert(PAD, "<PAD>")
    vocab.insert(SOS, "<SOS>")
    w2id = {w:i for i,w in enumerate(vocab)}
    ids = [[w2id[t] for t in sent] for sent in sentences]
    return ids, vocab, w2id, voc_cnt

ids, vocab, w2id, voc_cnt = toks2ids(tokens)

ids

[[5, 13, 14, 3, 15, 16, 17, 2], [6, 7, 18, 3, 19, 3, 20, 21, 22, 23, 2], [24, 8, 4, 9, 10, 11, 25, 26, 27, 28, 29, 30, 11, 31, 12, 2], [32, 8, 4, 9, 10, 33, 34, 35, 36, 12, 37, 38, 39, 40, 41, 42, 43, 6, 7, 2], [44, 45, 46, 2], [4, 47, 48, 49, 5, 50, 51, 2]]

vocab

['<PAD>', '<SOS>', '.', ',', 'i', 'all', 'the', 'war', 'guy', 'knew', 'really', 'was', 'his', 'this', 'happened', 'more', 'or', 'less', 'parts', 'anyway', 'are', 'pretty', 'much', 'true', 'one', 'shot', 'for', 'taking', 'a', 'teapot', 'that', "n't", 'another', 'did', 'threaten', 'to', 'have', 'personal', 'enemies', 'killed', 'by', 'hired', 'gunmen', 'after', 'and', 'so', 'on', "'", 've', 'changed', 'their', 'names']

Q: What could be another name for the vocab variable above?

w2id

{'<PAD>': 0,

'<SOS>': 1,

'.': 2,

',': 3,

'i': 4,

'all': 5,

'the': 6,

'war': 7,

'guy': 8,

'knew': 9,

'really': 10,

'was': 11,

'his': 12,

'this': 13,

'happened': 14,

'more': 15,

'or': 16,

'less': 17,

'parts': 18,

'anyway': 19,

'are': 20,

'pretty': 21,

'much': 22,

'true': 23,

'one': 24,

'shot': 25,

'for': 26,

'taking': 27,

'a': 28,

'teapot': 29,

'that': 30,

"n't": 31,

'another': 32,

'did': 33,

'threaten': 34,

'to': 35,

'have': 36,

'personal': 37,

'enemies': 38,

'killed': 39,

'by': 40,

'hired': 41,

'gunmen': 42,

'after': 43,

'and': 44,

'so': 45,

'on': 46,

"'": 47,

've': 48,

'changed': 49,

'their': 50,

'names': 51}
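To convert in the other direction, from ids back to words, the vocab list itself can serve as the lookup, since a token's position in the list is its id. The sketch below uses a tiny stand-in vocabulary so that it runs on its own. The helper name ids2toks is an assumption for illustration and is not part of the lesson's code.

```python
# Tiny stand-in data; in the lesson these come from toks2ids(tokens).
vocab = ["<PAD>", "<SOS>", ".", "i", "knew"]
w2id = {w: i for i, w in enumerate(vocab)}

def ids2toks(ids, vocab):
    """Map a list of integer ids back to tokens by indexing into vocab."""
    return [vocab[i] for i in ids]

encoded = [w2id[t] for t in ["i", "knew", "."]]
decoded = ids2toks(encoded, vocab)
print(encoded)  # [3, 4, 2]
print(decoded)  # ['i', 'knew', '.']
```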

What are the uses of RegEx?¶

- Find / Search

- Find & Replace

- Cleaning

str.find?
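A rough sketch of the three uses listed above, using Python's re module. The sample text and the patterns are invented for this example.

```python
import re

text = "Order #4821 \t shipped on 2019-06-03!!!"  # made-up sample text

# Find / search: locate the first ISO-style date.
m = re.search(r"\d{4}-\d{2}-\d{2}", text)
date = m.group(0) if m else None

# Find & replace: mask every run of digits with a placeholder.
masked = re.sub(r"\d+", "<NUM>", text)

# Cleaning: collapse whitespace runs and repeated exclamation marks.
cleaned = re.sub(r"\s+", " ", text)
cleaned = re.sub(r"!{2,}", "!", cleaned)

print(date)
print(masked)
print(cleaned)
```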

Regex vs. String methods¶

- String methods are easier to understand.

- String methods express the intent more clearly.

- Regexes handle a much broader range of use cases.

- Regex can be language independent.

- Regex can be faster at scale.
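To make the first few points of this comparison concrete, here is a small sketch on a made-up log line. For a fixed piece of text, the string method states the intent directly. Once the line has variable parts, a single regex with groups covers what would otherwise take several string operations.

```python
import re

line = "ERROR 2021: disk full"  # made-up log line

# Fixed text: a string method says exactly what is being checked.
starts_with_error = line.startswith("ERROR")

# Variable parts (level, numeric code, message): one regex with groups.
m = re.match(r"(ERROR|WARN) (\d+): (.*)", line)
level, code, msg = m.groups()

print(starts_with_error, level, code, msg)
```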

What about unicode?¶

message = "😒🎦 🤢🍕"

re_frown = re.compile(r"😒|🤢")

re_frown.sub(r"😊", message)

Regex Errors:¶

False positives (Type I): Matching strings that we should not have matched

False negatives (Type II): Not matching strings that we should have matched

Reducing the error rate for a task often involves two antagonistic efforts:

- Minimizing false positives

- Minimizing false negatives

Important to have tests for both!

In a perfect world, you would be able to minimize both but in reality you often have to trade one for the other.
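One way to test for both kinds of error is to keep two lists, strings that should match and strings that should not, and report what the pattern gets wrong on each. The pattern below is a deliberately imperfect, hypothetical one, chosen so that the check has something to report.

```python
import re

# Hypothetical pattern under test: digits separated by a space or hyphen.
phone_re = re.compile(r"\d{3}[ -]\d{3}[ -]\d{4}")

should_match = ["123-456-7890", "123 456 7890", "(123)456-7890"]
should_not_match = ["101 Howard", "1234", "34 50 98 21 32"]

# False negatives: valid examples the pattern misses.
false_negatives = [s for s in should_match if not phone_re.fullmatch(s)]
# False positives: invalid examples the pattern accepts.
false_positives = [s for s in should_not_match if phone_re.fullmatch(s)]

print("false negatives:", false_negatives)  # ['(123)456-7890']
print("false positives:", false_positives)  # []
```

Loosening the pattern to accept the parenthesized form would remove the false negative, and the second list is there to catch any false positives that the loosening introduces.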

Useful Tools:¶

- Regex cheatsheet

- regexr.com Realtime regex engine

- pyregex.com Realtime Python regex engine

Summary¶

- We use regex as a metalanguage to find string patterns in blocks of text

- r"" are your IRL friends for Python regex

- We are just doing binary classification so use the same performance metrics

- You'll make a lot of mistakes in regex 😩.

- False Positive: Thinking you are right but you are wrong

- False Negative: Missing something
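On the raw-string point in the summary: a short sketch of why the r"" prefix matters. In a normal Python string, "\b" is a single backspace character, while regex needs the two characters backslash and b to mean "word boundary". The sample text is made up.

```python
import re

# Normal string: "\b" is one character (backspace).
# Raw string: r"\b" is two characters, which regex reads as a word boundary.
print(len("\b"), len(r"\b"))  # 1 2

text = "cat concat cat."
whole_words = re.findall(r"\bcat\b", text)  # word-boundary match
no_raw = re.findall("\bcat\b", text)        # searches for backspace characters

print(whole_words)  # ['cat', 'cat']
print(no_raw)       # []
```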

---

Regex Terms¶

- target string: This term describes the string that we will be searching, that is, the string in which we want to find our match or search pattern.

- search expression: The pattern we use to find what we want. Most commonly called the regular expression.

- literal: A literal is any character we use in a search or matching expression, for example, to find 'ind' in 'windows' the 'ind' is a literal string - each character plays a part in the search, it is literally the string we want to find.

- metacharacter: A metacharacter is one or more special characters that have a unique meaning and are NOT used as literals in the search expression. For example "." means any character.

Metacharacters are the special sauce of regex.

- escape sequence: An escape sequence is a way of indicating that we want to use a metacharacter as a literal.

In a regular expression an escape sequence involves placing the metacharacter \ (backslash) in front of the metacharacter that we want to use as a literal.

'\.' means: find a literal period character (rather than matching any character).
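The difference between the metacharacter and its escaped form can be seen in a short sketch. The sample string is invented. re.escape is a standard-library helper that produces the escaped form when the literal text comes from data.

```python
import re

text = "v1.2 vs v1x2"  # made-up sample text

# Unescaped "." is a metacharacter: it matches any character (except a newline by default).
any_char = re.findall(r"v1.2", text)

# Escaped "\." matches only a literal period.
literal_dot = re.findall(r"v1\.2", text)

# re.escape adds the backslashes for us.
escaped = re.escape("v1.2")

print(any_char)     # ['v1.2', 'v1x2']
print(literal_dot)  # ['v1.2']
print(escaped)      # v1\.2
```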

Regex Workflow¶

- Create pattern in Plain English

- Map to regex language

- Make sure results are correct:

- All Positives: Captures all examples of pattern

- No Negatives: Everything captured is from the pattern

- Don't over-engineer your regex.

- Your goal is to Get Stuff Done, not write the best regex in the world

- Filtering before and after is okay.
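As a sketch of the "filter afterwards" idea from the workflow above: use a deliberately loose regex to collect candidates, then apply a plain-Python check to the results. The text, the loose pattern, and the 10-digit rule are all assumptions made for this example. The digit count is only a heuristic and would still accept a string like "34 50 98 21 32".

```python
import re

text = "Call 123-456-7890 or (123)456-7890 before 2019-06-03, room 101."

# Step 1: loose pattern. A digit (optionally after "("), then at least six
# digits/separators, ending in a digit.
loose = re.compile(r"\(?\d[\d ()-]{6,}\d")

def digits_only(s):
    """Strip every non-digit character."""
    return re.sub(r"\D", "", s)

# Step 2: filter the candidates with ordinary code: keep those with 10 digits.
candidates = loose.findall(text)
phones = [digits_only(c) for c in candidates if len(digits_only(c)) == 10]

print(candidates)  # ['123-456-7890', '(123)456-7890', '2019-06-03']
print(phones)      # ['1234567890', '1234567890']
```

Splitting the work this way keeps the regex short and readable, at the cost of a second pass over the candidates.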