#!/usr/bin/env python

# coding: utf-8

# In this notebook, I want to wrap up some loose ends from last time.

# ## The two cultures

# This "debate" captures the tension between two approaches:

#

# - modeling the underlying mechanism of a phenomenon

# - using machine learning to predict outputs (without necessarily understanding the mechanisms that create them)

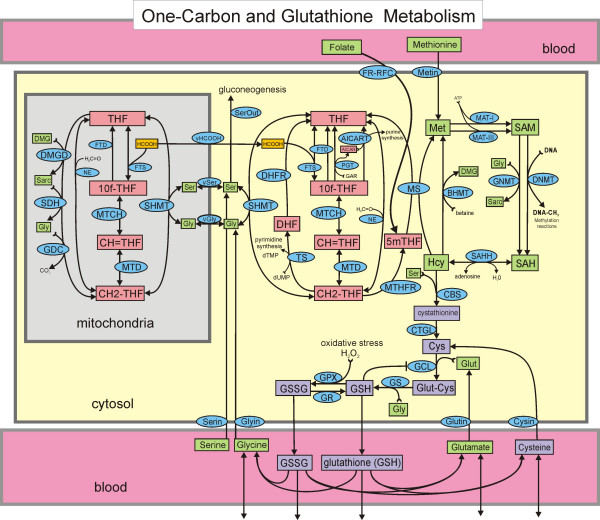

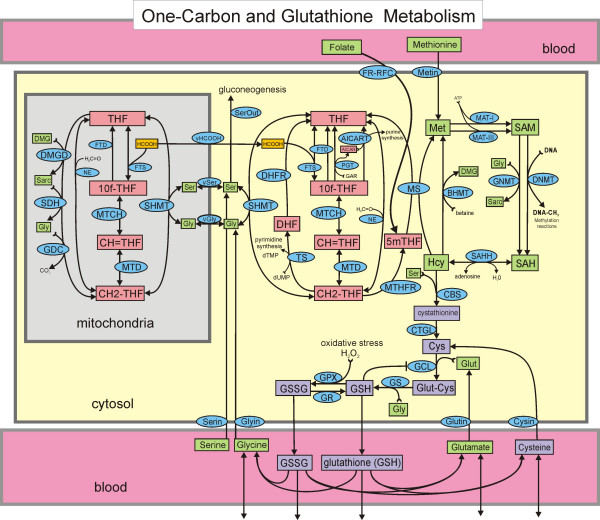

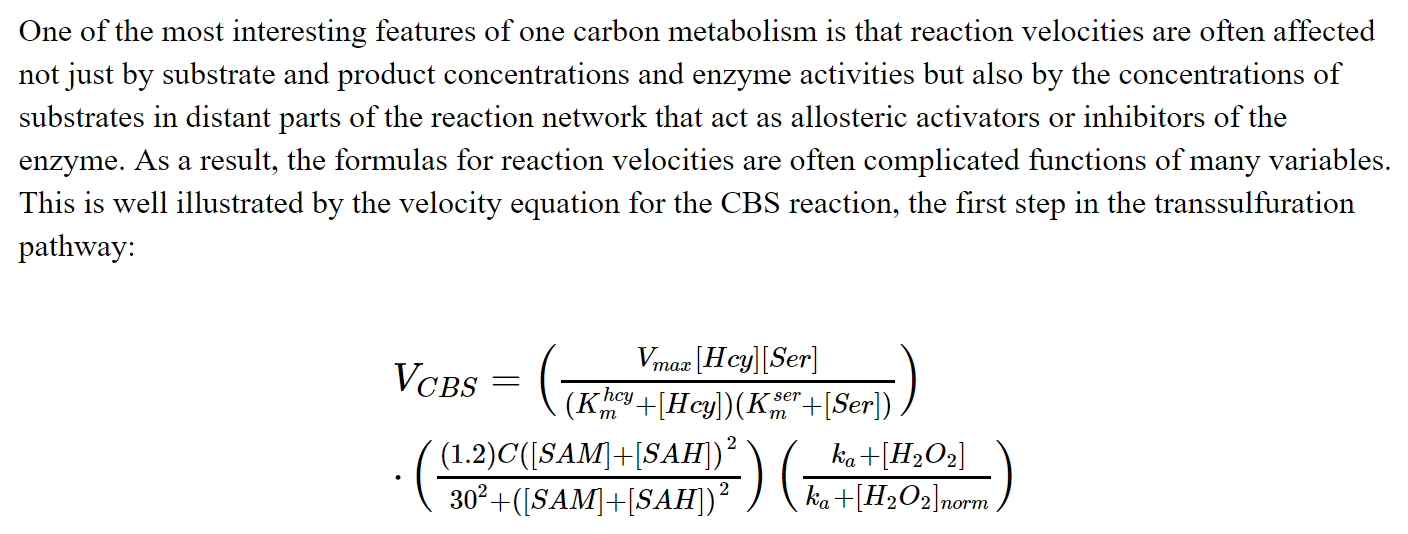

# I was part of a research project (in 2007) that involved manually coding each of the above reactions. We were determining whether the final system could generate the same outputs (in this case, blood levels of various substrates) as were observed in clinical studies.

#

# The equation for each reaction could be quite complex:

#


# This is an example of modeling the underlying mechanism, and is very different from a machine learning approach.

#

# Source: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2391141/

# ## The most popular word in each state

#

#


# A time to remove stop words
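# Raw word counts tend to be dominated by stop words such as "the", which says nothing about a text's content. A minimal sketch of the effect, assuming a tiny hand-picked stop list (`STOP_WORDS`) and a hypothetical helper `most_common_word`; scikit-learn's `stop_words='english'`, used later in this notebook, relies on a much longer built-in list.

```python
from collections import Counter

# Tiny illustrative stop list (an assumption, not scikit-learn's list)
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "was"}

def most_common_word(text, stop_words=frozenset()):
    """Return the most frequent lowercase token in `text`, skipping `stop_words`."""
    tokens = [w.strip(".,;:!?\"'").lower() for w in text.split()]
    counts = Counter(w for w in tokens if w and w not in stop_words)
    return counts.most_common(1)[0][0]

sample = "The river ran past the mill and the river fed the mill pond by the river."
print(most_common_word(sample))              # the
print(most_common_word(sample, STOP_WORDS))  # river
```

# Once the stop words are filtered out, the winner reflects what the text is actually about.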

# ## Factorization is analogous to matrix decomposition

# ### With Integers

# Multiplication:

# $$2 * 2 * 3 * 3 * 2 * 2 \rightarrow 144$$

#


# Factorization is the “opposite” of multiplication:

# $$144 \rightarrow 2 * 2 * 3 * 3 * 2 * 2$$

#

# Here, the factors have the nice property of being prime.

#

# Prime factorization is much harder than multiplication (which is good, because the difficulty of factoring large numbers is at the heart of encryption schemes such as RSA).
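# Trial division makes the asymmetry concrete: multiplying the factors back together is a single pass, while recovering them means searching for divisors, which is only cheap here because 144 is tiny. A minimal sketch (`prime_factors` is a hypothetical helper, not a library function):

```python
from math import prod

def prime_factors(n):
    """Factor a positive integer n into primes by trial division (fine for small n)."""
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:   # divide out each prime as many times as it occurs
            factors.append(d)
            n //= d
        d += 1
    if n > 1:               # whatever remains is itself prime
        factors.append(n)
    return factors

factors = prime_factors(144)
print(factors)        # [2, 2, 2, 2, 3, 3]
print(prod(factors))  # 144
```

# The factors come out sorted, but they are the same multiset as 2 * 2 * 3 * 3 * 2 * 2.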

# ### With Matrices

# Matrix decompositions are a way of taking matrices apart (the "opposite" of matrix multiplication).

#

# Similarly, we use matrix decompositions to come up with matrices with nice properties.

# Taking matrices apart is harder than putting them together.

#

# [One application](https://github.com/fastai/numerical-linear-algebra/blob/master/nbs/3.%20Background%20Removal%20with%20Robust%20PCA.ipynb):

#


# What are the nice properties that matrices in an SVD decomposition have?

#

# $$A = USV$$
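# A quick numerical check of those properties, sketched with NumPy on an arbitrary random 6 x 4 matrix. `np.linalg.svd` returns the third factor already transposed, which matches how this notebook writes $A = USV$.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((6, 4))

U, s, V = np.linalg.svd(A, full_matrices=False)

print(np.allclose(U.T @ U, np.eye(4)))          # columns of U are orthonormal
print(np.allclose(V @ V.T, np.eye(4)))          # rows of V are orthonormal
print(np.all(s >= 0), np.all(np.diff(s) <= 0))  # singular values: non-negative, descending
print(np.allclose(A, U @ np.diag(s) @ V))       # the factors multiply back to A
```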

# ## Some Linear Algebra Review

# ### Matrix-vector multiplication

# $Ax = b$ takes a linear combination of the columns of $A$, using coefficients $x$

#

# http://matrixmultiplication.xyz/
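# The column view of $Ax = b$ can be checked directly: the product equals the columns of $A$ weighted by the entries of $x$. A small sketch with made-up numbers:

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4],
              [5, 6]])
x = np.array([10, -1])

b = A @ x
# The same result, built explicitly as x[0] * (column 0) + x[1] * (column 1)
combo = x[0] * A[:, 0] + x[1] * A[:, 1]
print(b, combo)  # [ 8 26 44] [ 8 26 44]
```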

# ### Matrix-matrix multiplication

# In $AB = C$, each column of $C$ is a linear combination of the columns of $A$, where the coefficients come from the corresponding column of $B$.
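# The same column view extends to matrix-matrix products: column `j` of $C$ is just $A$ times column `j` of $B$. A small sketch with made-up matrices:

```python
import numpy as np

A = np.array([[1, 2],
              [3, 4],
              [5, 6]])
B = np.array([[1, 0, 2],
              [0, 1, -1]])
C = A @ B

# Column j of C is a linear combination of A's columns,
# with coefficients taken from B[:, j]
for j in range(B.shape[1]):
    assert np.array_equal(C[:, j], A @ B[:, j])
print(C)
```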

#


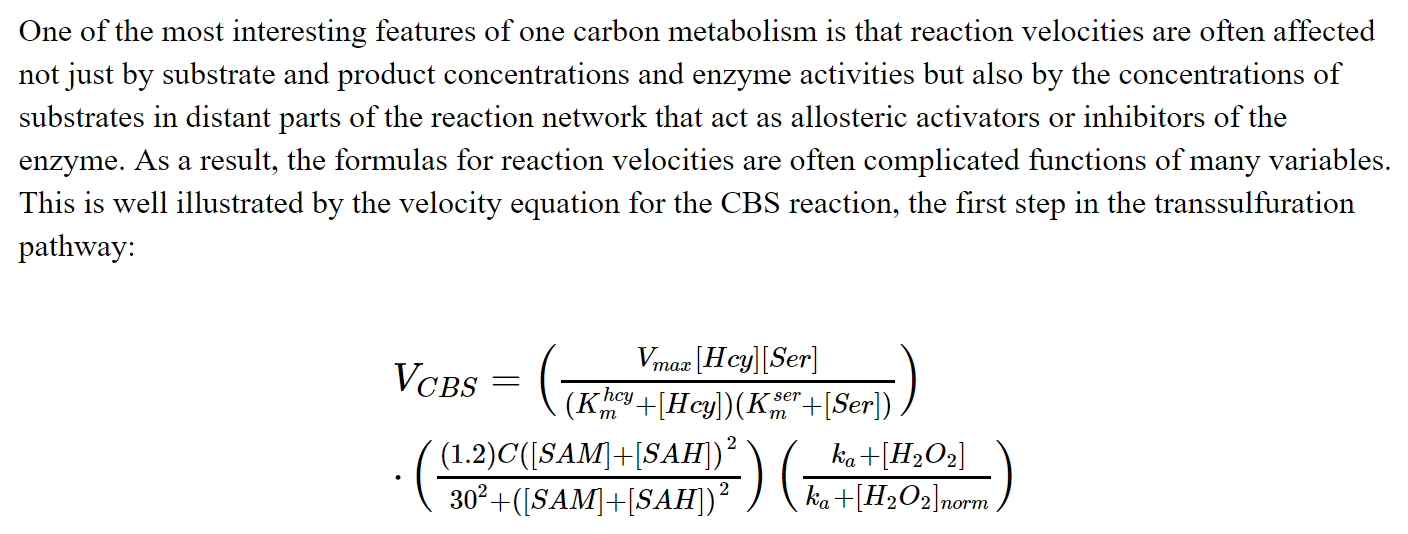

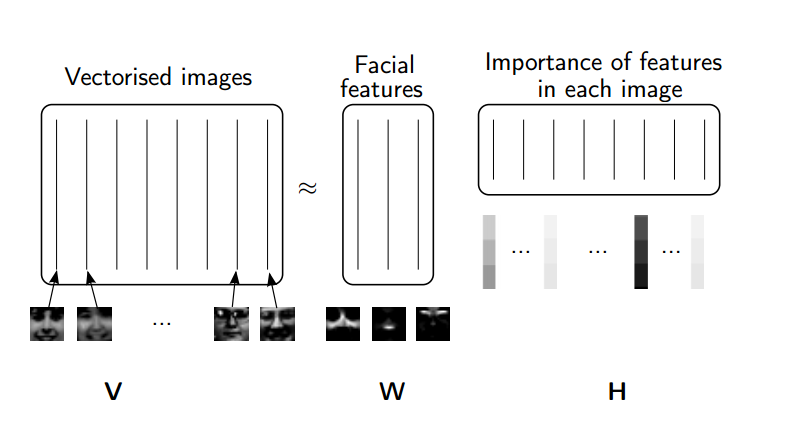

# (source: [NMF Tutorial](http://perso.telecom-paristech.fr/~essid/teach/NMF_tutorial_ICME-2014.pdf))

# ### Matrices as Transformations

# The 3Blue1Brown [Essence of Linear Algebra](https://www.youtube.com/playlist?list=PLZHQObOWTQDPD3MizzM2xVFitgF8hE_ab) videos are fantastic. They give a much more visual and geometric perspective on linear algebra than the way it is typically taught. These videos are a great resource if you are a linear algebra beginner, or if you feel uncomfortable or rusty with the material.

#

# Even if you are a linear algebra pro, I still recommend these videos for a new perspective, and they are very well made.

# In[2]:

from IPython.display import YouTubeVideo

YouTubeVideo("kYB8IZa5AuE")
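# In the spirit of those videos, a matrix can be read as the transformation it applies: its columns record where the basis vectors land. A small sketch with a 90-degree rotation matrix chosen for illustration:

```python
import numpy as np

# 90-degree counter-clockwise rotation: (1, 0) -> (0, 1) and (0, 1) -> (-1, 0)
R = np.array([[0, -1],
              [1,  0]])

v = np.array([2, 1])
print(R @ v)      # [-1  2]: v rotated by 90 degrees
print(R @ R @ v)  # [-2 -1]: composing two rotations gives a 180-degree turn
```

# Composing transformations is matrix multiplication, which is why `R @ R` is a half turn.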

# ## British Literature SVD & NMF in Excel

# Data was downloaded from [here](https://de.dariah.eu/tatom/datasets.html)

#

# The code below was used to create the matrices displayed in the SVD and NMF of British Literature Excel workbook. The data is intended to be viewed in Excel; I've included the code here for thoroughness.

# ### Initialize and create the document-term matrix

# In[2]:

import numpy as np

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

from sklearn import decomposition

from glob import glob

import os

# In[3]:

np.set_printoptions(suppress=True)

# In[46]:

filenames = []
# Collect every plain-text novel in the corpus folder(s)
for folder in ["british-fiction-corpus"]:  # , "french-plays", "hugo-les-misérables"]:
    filenames.extend(glob("data/literature/" + folder + "/*.txt"))

# In[47]:

len(filenames)

# In[134]:

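vectorizer = TfidfVectorizer(input='filename', stop_words='english')
# Rows are documents, columns are vocabulary terms (TF-IDF weights), densified for export
dtm = vectorizer.fit_transform(filenames).toarray()
# get_feature_names() was removed in scikit-learn 1.2; get_feature_names_out() replaces it
vocab = np.array(vectorizer.get_feature_names_out())
dtm.shape, len(vocab)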

# In[135]:

[f.split("/")[3] for f in filenames]

# ### NMF

# In[136]:

clf = decomposition.NMF(n_components=10, random_state=1)

W1 = clf.fit_transform(dtm)

H1 = clf.components_
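# `decomposition.NMF` approximates the non-negative TF-IDF matrix as `dtm ≈ W1 @ H1`, with both factors non-negative. To see the idea without the corpus, here is a minimal sketch using the classic Lee-Seung multiplicative updates on a small random matrix. This is a swapped-in, simplified algorithm for illustration (`nmf_multiplicative` is a hypothetical helper); scikit-learn's `NMF` defaults to a coordinate-descent solver instead.

```python
import numpy as np

def nmf_multiplicative(X, k, n_iter=1000, eps=1e-10, seed=0):
    """Approximate non-negative X (m x n) as W @ H with W (m x k) >= 0 and H (k x n) >= 0,
    using Lee-Seung multiplicative updates for the squared Frobenius-norm objective."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W = rng.random((m, k))
    H = rng.random((k, n))
    for _ in range(n_iter):
        # Each update multiplies by a non-negative ratio, so W and H stay non-negative
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H

rng = np.random.default_rng(1)
X = rng.random((8, 3)) @ rng.random((3, 12))  # made-up non-negative matrix of rank <= 3
W, H = nmf_multiplicative(X, k=3)
rel_err = np.linalg.norm(X - W @ H) / np.linalg.norm(X)
print(W.shape, H.shape, (W >= 0).all() and (H >= 0).all(), round(rel_err, 4))
```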

# In[137]:

num_top_words = 8

def show_topics(a):
    # For each topic (row of a), join its num_top_words highest-weighted words
    top_words = lambda t: [vocab[i] for i in np.argsort(t)[:-num_top_words-1:-1]]
    topic_words = [top_words(t) for t in a]
    return [' '.join(t) for t in topic_words]

# In[138]:

def get_all_topic_words(H):
    # Union of the top-word indices across all topics, sorted so columns stay in vocab order
    top_indices = lambda t: {i for i in np.argsort(t)[:-num_top_words-1:-1]}
    topic_indices = [top_indices(t) for t in H]
    return sorted(set.union(*topic_indices))
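# Both helpers rely on the slice `np.argsort(t)[:-num_top_words-1:-1]`: `argsort` orders indices by ascending weight, and the negative-step slice walks backwards from the end, keeping the `num_top_words` largest weights in descending order. A small check on a made-up weight vector:

```python
import numpy as np

num_top_words = 3
t = np.array([0.1, 0.7, 0.0, 0.4, 0.9])  # made-up topic weights over a 5-word vocabulary

top = np.argsort(t)[:-num_top_words-1:-1]
print(top)  # [4 1 3]: indices of the three largest weights, largest first
```

# An equivalent, arguably more readable form is `np.argsort(t)[::-1][:num_top_words]`.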

# In[139]:

ind = get_all_topic_words(H1)

# In[140]:

vocab[ind]

# In[141]:

show_topics(H1)

# In[142]:

W1.shape, H1[:, ind].shape

# #### Export to CSVs

# In[72]:

from IPython.display import FileLink, FileLinks

# In[119]:

np.savetxt("britlit_W.csv", W1, delimiter=",", fmt='%.14f')

FileLink('britlit_W.csv')

# In[120]:

np.savetxt("britlit_H.csv", H1[:,ind], delimiter=",", fmt='%.14f')

FileLink('britlit_H.csv')

# In[131]:

np.savetxt("britlit_raw.csv", dtm[:,ind], delimiter=",", fmt='%.14f')

FileLink('britlit_raw.csv')

# In[121]:

[str(word) for word in vocab[ind]]

# ### SVD

# In[143]:

U, s, V = decomposition.randomized_svd(dtm, 10)

# In[144]:

ind = get_all_topic_words(V)

# In[145]:

len(ind)

# In[146]:

vocab[ind]

# In[147]:

show_topics(V)  # topics from the SVD components (not the NMF's H1)

# In[148]:

np.savetxt("britlit_U.csv", U, delimiter=",", fmt='%.14f')

FileLink('britlit_U.csv')

# In[149]:

np.savetxt("britlit_V.csv", V[:,ind], delimiter=",", fmt='%.14f')

FileLink('britlit_V.csv')

# In[150]:

np.savetxt("britlit_raw_svd.csv", dtm[:,ind], delimiter=",", fmt='%.14f')

FileLink('britlit_raw_svd.csv')

# In[151]:

np.savetxt("britlit_S.csv", np.diag(s), delimiter=",", fmt='%.14f')

FileLink('britlit_S.csv')

# In[152]:

[str(word) for word in vocab[ind]]

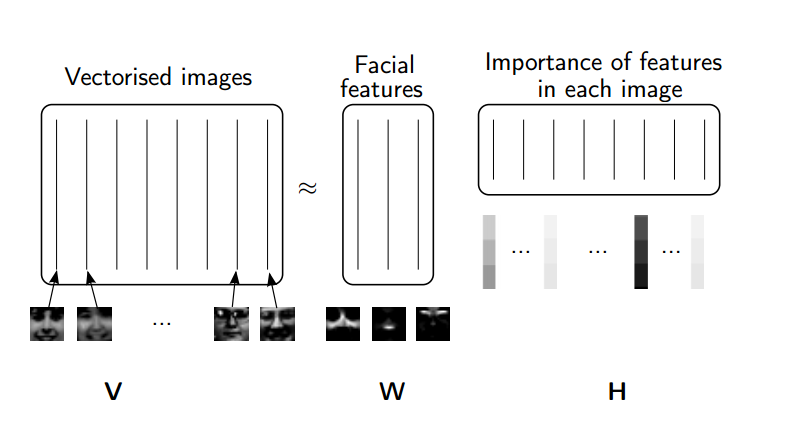

# ## Randomized SVD offers a speed up


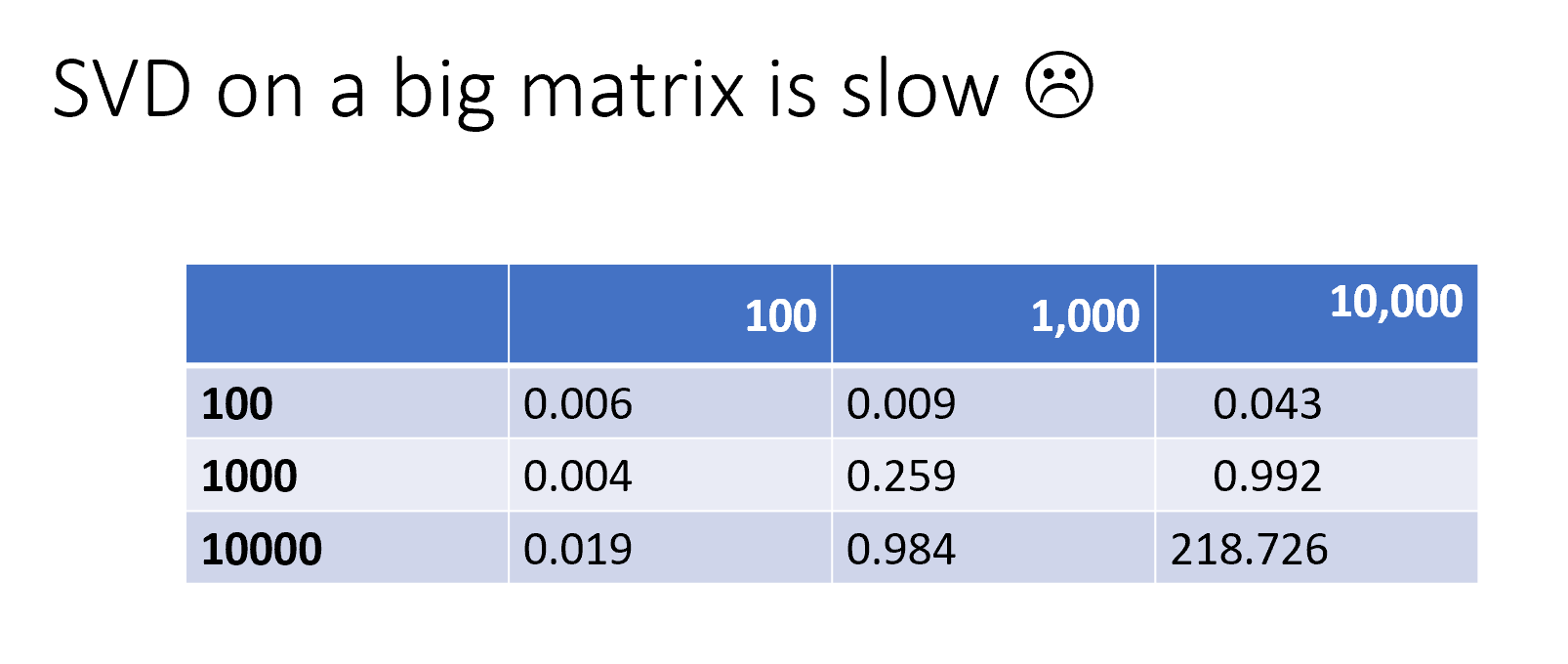


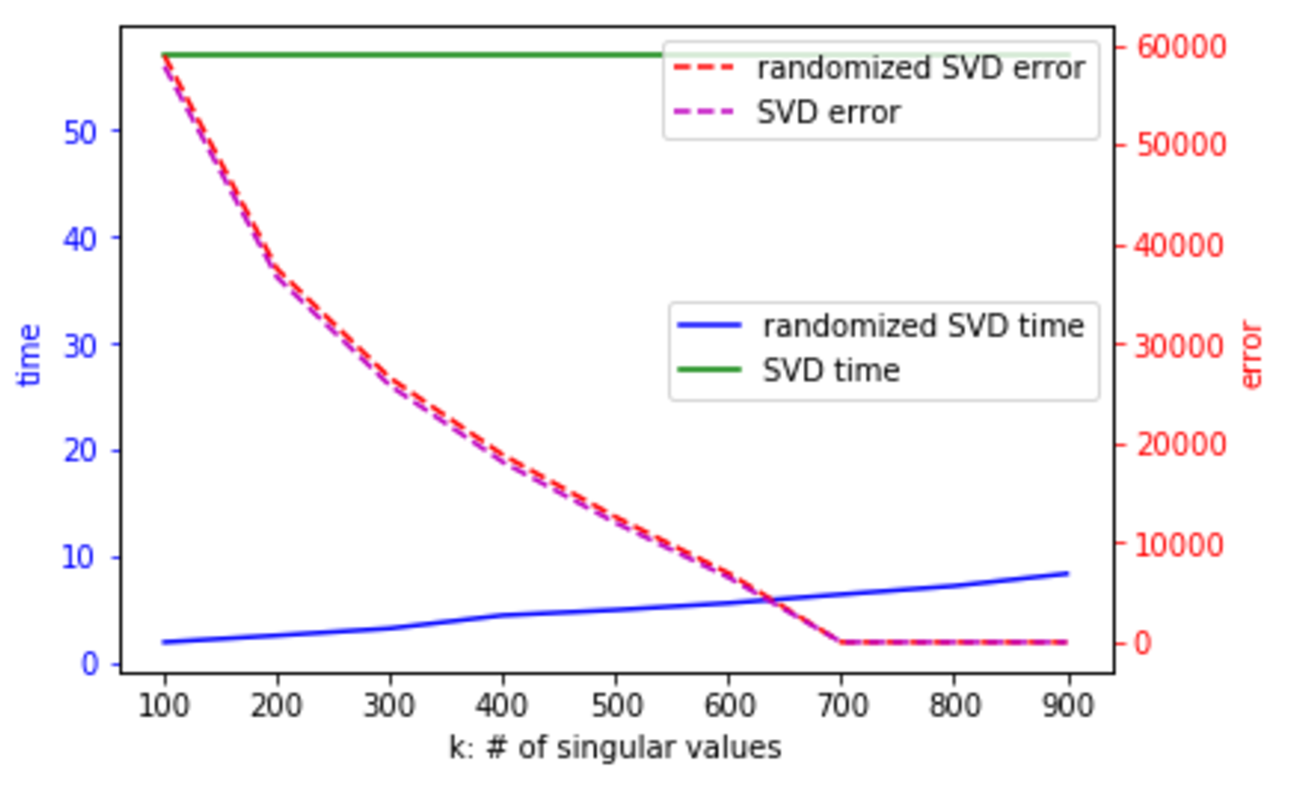

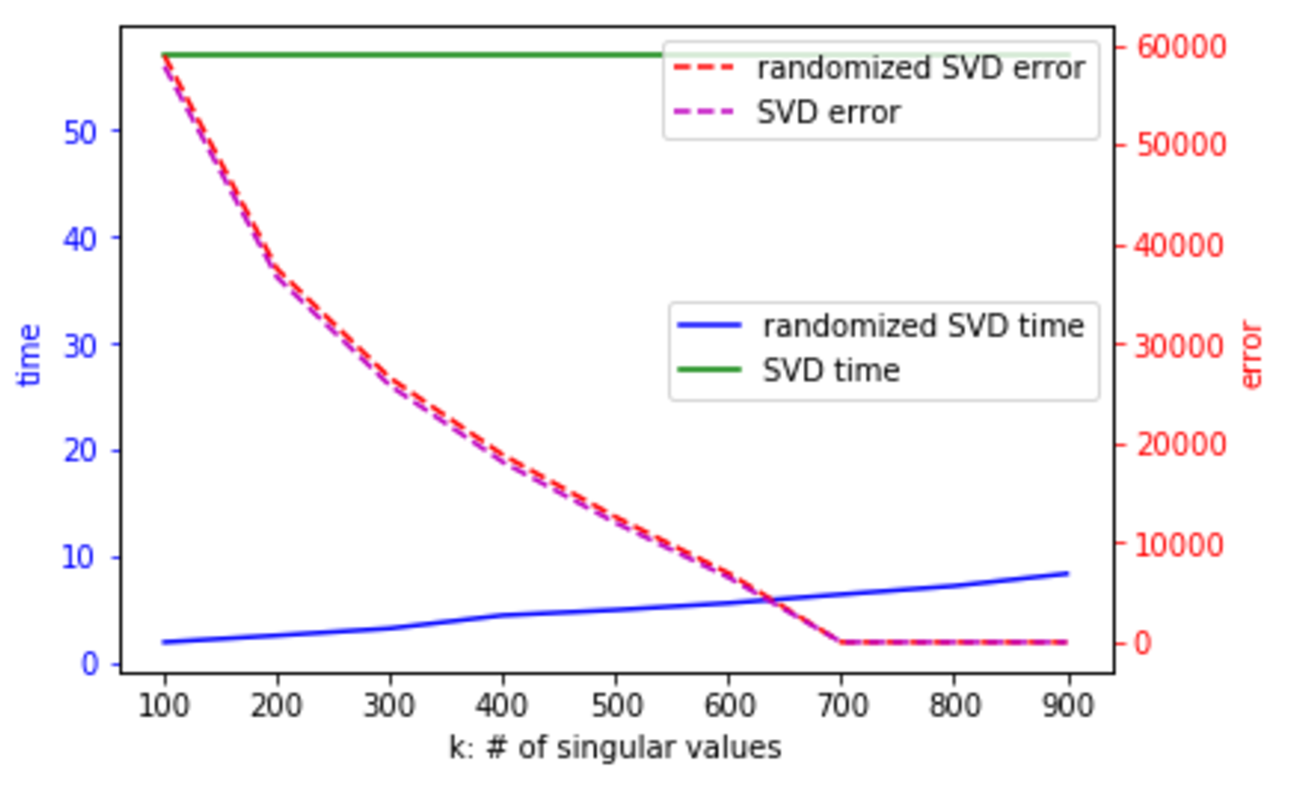

# One way to address this is to use randomized SVD. In the chart below, the error is the difference $A - USV$, that is, what you've failed to capture in your decomposition:

#


# For more on randomized SVD, check out my [PyBay 2017 talk](https://www.youtube.com/watch?v=7i6kBz1kZ-A&list=PLtmWHNX-gukLQlMvtRJ19s7-8MrnRV6h6&index=7).

#

# For significantly more on randomized SVD, check out the [Computational Linear Algebra course](https://github.com/fastai/numerical-linear-algebra).

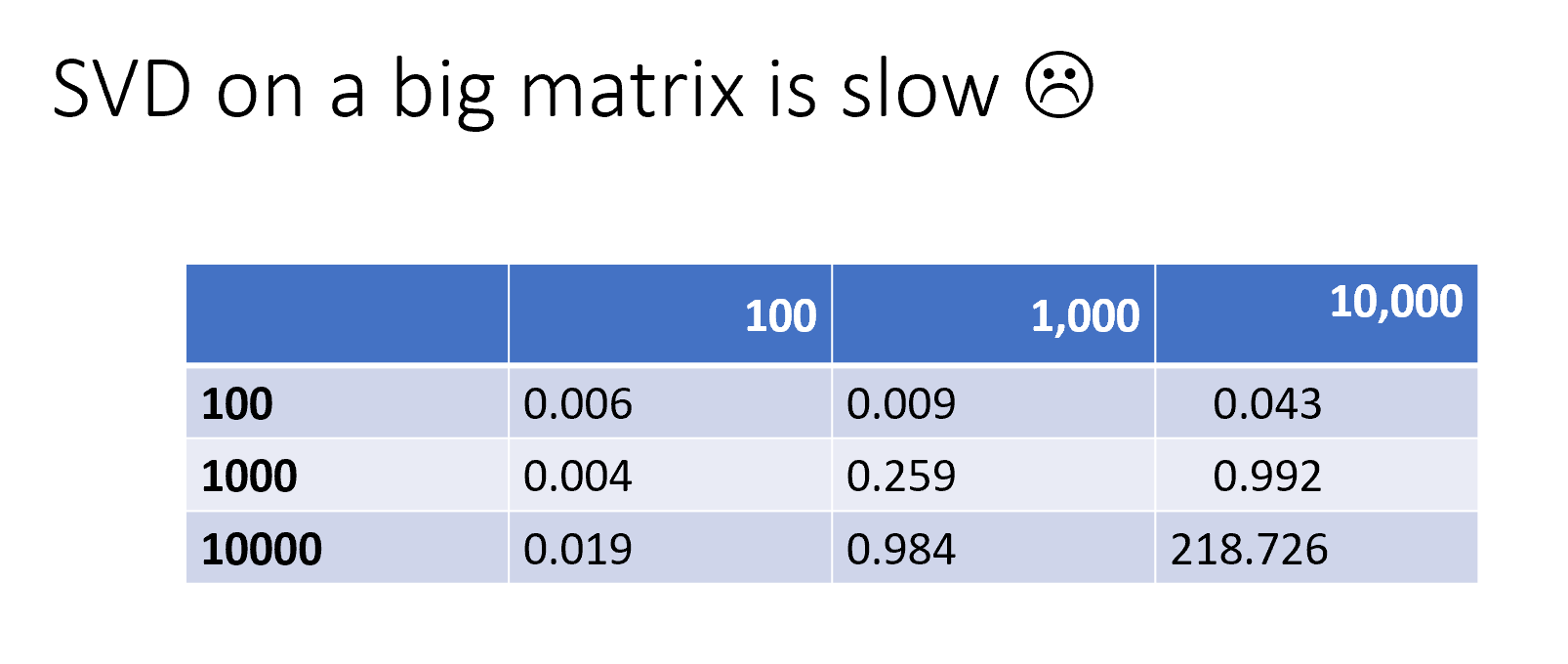

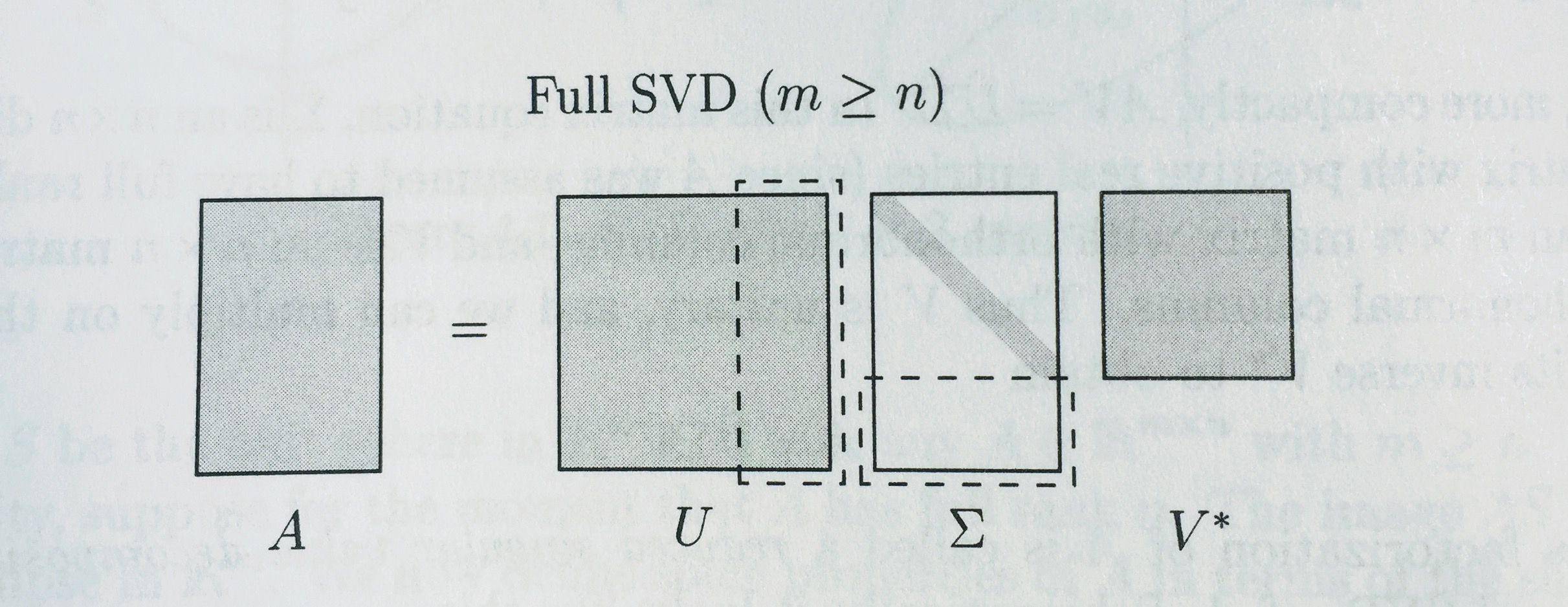

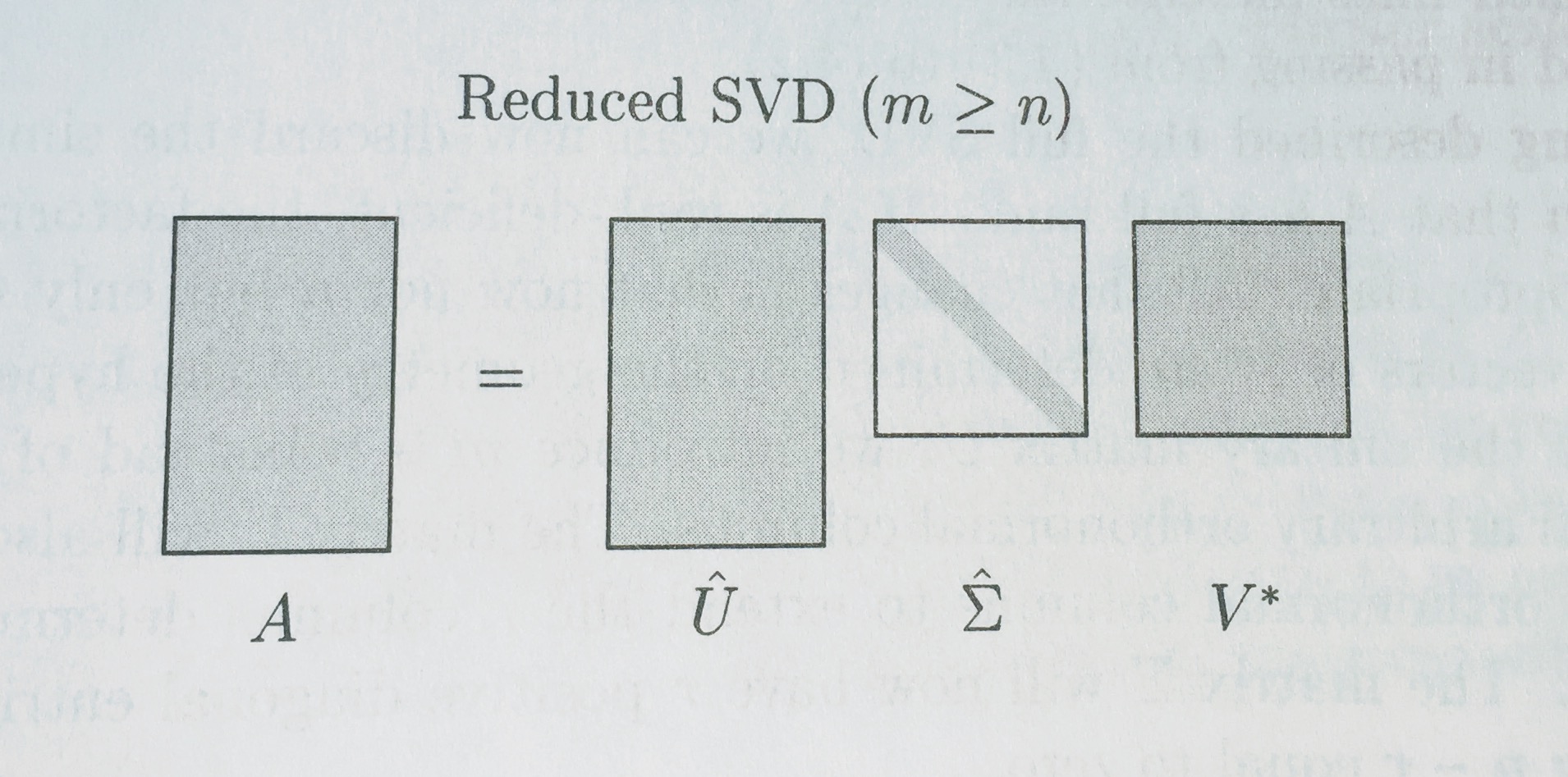

# ## Full vs Reduced SVD

# Remember how we were calling `np.linalg.svd(vectors, full_matrices=False)`? We set `full_matrices=False` to calculate the reduced SVD. For the full SVD, both U and V are **square** matrices; the extra columns of U complete an orthonormal basis, but they are zeroed out when multiplied by the extra rows of zeros in S.
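# The difference shows up directly in the shapes NumPy returns. A small sketch on an arbitrary 5 x 3 matrix, padding S with zero rows for the full case so the product still reconstructs A:

```python
import numpy as np

A = np.random.default_rng(0).standard_normal((5, 3))

U, s, V = np.linalg.svd(A)                          # full SVD (the default)
Ur, sr, Vr = np.linalg.svd(A, full_matrices=False)  # reduced SVD

print(U.shape, s.shape, V.shape)     # (5, 5) (3,) (3, 3)
print(Ur.shape, sr.shape, Vr.shape)  # (5, 3) (3,) (3, 3)

# For the full SVD, S is 5 x 3 with two rows of zeros,
# so the two extra columns of U contribute nothing to the product
S = np.zeros((5, 3))
S[:3, :3] = np.diag(s)
print(np.allclose(A, U @ S @ V), np.allclose(A, Ur @ np.diag(sr) @ Vr))
```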

# Diagrams from Trefethen:

#

#
